Ask me what matters most in an application and there are several things I can think of: clean code, DRY methods, integration testing, the technology being used, great design… Still, if I had to choose between losing all of those or losing just the data, my answer would almost always be: “Protect the data.”

Application code and design are produced by a limited number of people, and we can be fairly certain who they are. Application data, on the other hand, is generated by the application’s users, and there tend to be far more of them (fingers crossed). Not to mention that data accumulates over time. For any successful application, data that is lost cannot be recreated.

Still, Rails is notorious for its bad relationship with the RDBMS. Not in the sense that it doesn’t support one, but in the sense that the ecosystem takes a “code comes first” view, with data storage only secondary. You can read this between the lines in trade-offs like the following:

- You don’t need first-class referential constraint support; Rails does it for you

- Let’s add first-class support for cascade delete (so you, as the developer, can test out your code and enjoy the rest of your day)

- Let’s add a brand-new abstraction on top of SQL, which we’ll use to fetch data

- Raw SQL is shameful

- We should not use the RDBMS to its full capacity; instead, we’ll keep our “code” portable with this abstraction on top of SQL and use only a common subset of features

Examples from the wild

One medium-sized insurance company managed to forget the “WHERE” clause of a fairly simple SQL update script. They ended up resetting ALL invoice amounts to 1 EUR. Thanks to regular backups and transaction logging, they were able to restore their backup and replay their transactions right up to 1 minute before the accidental update. Had they not had both of those, I can’t even begin to imagine what the consequences would have been. To be clear, SQL Server does not set this up for you: restoring to a point in time requires the database to use the full recovery model and to have regular transaction log backups. You need to actually know what you are doing and configure this before disaster strikes.

If you are thinking to yourself, “Well, of course they had backups, they are an insurance company after all,” think again.

I also happen to know of a decent-sized bank that went a whole month without backing up its production data. How is that even possible? The person who was supposed to put in the magnetic tape was on vacation, so they ended up “creating” their backups with no tape in the backup machine.

Now you must be thinking: wait, this could never happen in a normal country, you guys over there are crazy. But didn’t Amazon recently have a huge S3 outage in the US-East-1 region (on February 28, 2017) because of a mistake by Amazon employees?

Amazon announced that the outage was caused by a command input entered incorrectly during debugging, which removed a much larger set of servers than intended.

OK, not a database issue, but still data. My point is that these things do happen, and quite frequently.

Rails Active Admin

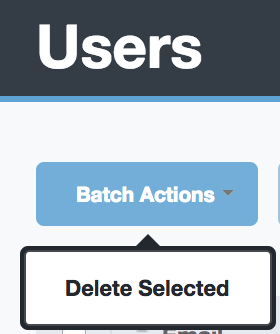

There’s this “nice” and useful option in the Active Admin gem (the most popular Rails administration gem, with more than 1.5M downloads) to bulk delete any or all entries.

If we were to run this action on the wrong items, some very bad things would happen. In Rails, we sometimes even go out of our way to make sure the “delete” action is thorough. We say, “Oh, you want to delete the user? Let’s delete all data related to this user with these cascade-delete rules.” This is nice in theory, but in practice it is a nightmare. Why would we delete the user along with all of his or her related data? What exactly is the business scenario for this? While this might make sense during development, it is a big no-no for production data.
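To make the danger concrete, here is a minimal sketch of a cascade rule at the database level. It is not code from any real project. It uses Python’s built-in `sqlite3` module only so that it runs without a database server or gems, and the `users`/`invoices` tables are hypothetical. A single delete of a single user takes that user’s entire invoice history with it, and the database reports no error.

```python
import sqlite3

# Hypothetical schema: invoices reference users with ON DELETE CASCADE.
conn = sqlite3.connect(":memory:", isolation_level=None)  # autocommit mode
conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce FKs unless asked
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    """CREATE TABLE invoices (
           id INTEGER PRIMARY KEY,
           user_id INTEGER NOT NULL REFERENCES users(id) ON DELETE CASCADE,
           amount_cents INTEGER NOT NULL)"""
)
conn.execute("INSERT INTO users (id, name) VALUES (1, 'Alice')")
conn.executemany(
    "INSERT INTO invoices (user_id, amount_cents) VALUES (?, ?)",
    [(1, 12000), (1, 4550), (1, 990)],
)


def invoice_count() -> int:
    return conn.execute("SELECT COUNT(*) FROM invoices").fetchone()[0]


before = invoice_count()
conn.execute("DELETE FROM users WHERE id = 1")  # one "innocent" delete...
after = invoice_count()                         # ...and the invoice history is gone
print(before, after)  # 3 0
```

On the application side, Rails’ `has_many :invoices, dependent: :destroy` reaches the same end state by destroying each associated invoice along with the user.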

Snake Oil Solutions

When faced with the reality of how severely uncool it is to delete real-world data (which records events that actually happened during real-world usage), the knee-jerk reaction is to create a “perfect” solution that requires minimal changes.

Welcome to Acts As Paranoid, a gem for “soft” delete. It has been downloaded more than 0.5 million times. While it is a great improvement and miles better than just obliterating data, in a way it just further blurs the issue at hand.

For example, maybe we want to mark certain users as inactive and remove them from a particular drop-down list. But is hiding them from the rest of the application with default scopes really a good enough approach? I feel this just postpones a proper solution: supporting “deactivated” items that are still available to the system but ignored in most day-to-day functionality.
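One way to picture “deactivation” is a nullable timestamp on the row, plus explicit filtering only where the business needs it. This is a sketch under assumptions: the `deactivated_at` column and the tables are hypothetical, and Python’s built-in `sqlite3` is used only to keep it self-contained. The deactivated user disappears from the drop-down query but still owns his invoices in reporting.

```python
import sqlite3

# Hypothetical schema: users carry a nullable deactivated_at timestamp
# instead of being deleted or hidden behind a default scope.
conn = sqlite3.connect(":memory:", isolation_level=None)  # autocommit mode
conn.execute("PRAGMA foreign_keys = ON")
conn.execute(
    """CREATE TABLE users (
           id INTEGER PRIMARY KEY,
           name TEXT NOT NULL,
           deactivated_at TEXT)"""  # NULL means the user is active
)
conn.execute(
    """CREATE TABLE invoices (
           id INTEGER PRIMARY KEY,
           user_id INTEGER NOT NULL REFERENCES users(id) ON DELETE RESTRICT,
           amount_cents INTEGER NOT NULL)"""
)
conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
conn.execute("INSERT INTO invoices (user_id, amount_cents) VALUES (2, 4550)")

# Deactivate Bob: an UPDATE, not a DELETE, so his invoices keep a valid owner.
conn.execute("UPDATE users SET deactivated_at = datetime('now') WHERE id = 2")

# Day-to-day screens explicitly ask for active users only...
dropdown = [name for (name,) in conn.execute(
    "SELECT name FROM users WHERE deactivated_at IS NULL ORDER BY name")]

# ...while reporting still sees every user who ever had an invoice.
report = conn.execute(
    """SELECT u.name, u.deactivated_at IS NOT NULL, i.amount_cents
       FROM invoices i JOIN users u ON u.id = i.user_id"""
).fetchall()

print(dropdown)  # ['Alice']
print(report)    # [('Bob', 1, 4550)]
```

In Rails terms, this maps naturally to an explicit named scope such as `scope :active, -> { where(deactivated_at: nil) }` (the column name is an assumption) that callers opt into, rather than a `default_scope` that hides rows everywhere.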

Leaving guns around the house

Personally, I view cascade delete and batch delete as a recipe for data disaster.

That time a drunk Richard Nixon tried to nuke North Korea

The President got on the phone with the Joint Chiefs of Staff and ordered plans for a tactical nuclear strike and recommendations for targets. Henry Kissinger, the National Security Advisor for Nixon at the time, also got on the phone to the Joint Chiefs and got them to agree to stand down on that order until Nixon woke up sober the next morning.

We should always aim to protect our system against these kinds of scenarios.

Worst Case Scenarios

Let’s imagine we’ve got ourselves a system that has users for whom we generate invoices. Let’s try to figure out what would happen if we:

- a) Do a batch user delete from Active Admin

- b) Execute “User.destroy_all” in the Rails console

- c) Execute “DELETE FROM users” in the RDBMS console

In an ideal world, all three would fail with referential constraint errors for the users who have invoices.

In those cases, we don’t want to “soft” delete, and we certainly don’t want to cascade delete both the users and the invoices!

We need to limit exposure; we need to hide the nuclear keys. The database is a fortress!
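Here is roughly what that “ideal world” looks like at the database level. This is a minimal sketch with a hypothetical schema, using Python’s built-in `sqlite3` only so it runs anywhere. A restrictive foreign key applies no matter which route the delete takes, because Active Admin, `User.destroy_all` and the console all end up issuing DELETE statements.

```python
import sqlite3

# Hypothetical schema: invoices reference users with ON DELETE RESTRICT.
conn = sqlite3.connect(":memory:", isolation_level=None)  # autocommit mode
conn.execute("PRAGMA foreign_keys = ON")  # without this, SQLite ignores REFERENCES clauses
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    """CREATE TABLE invoices (
           id INTEGER PRIMARY KEY,
           user_id INTEGER NOT NULL REFERENCES users(id) ON DELETE RESTRICT,
           amount_cents INTEGER NOT NULL)"""
)
conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
conn.execute("INSERT INTO invoices (user_id, amount_cents) VALUES (1, 12000)")  # only Alice is invoiced


def user_count() -> int:
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


# Scenario c): someone runs "DELETE FROM users" in the database console.
error = None
try:
    conn.execute("DELETE FROM users")
except sqlite3.IntegrityError as exc:
    error = str(exc)
after_bulk_delete = user_count()
print(error)              # FOREIGN KEY constraint failed
print(after_bulk_delete)  # 2: the statement is rejected as a whole, so Bob survives too

# A user without invoices can still be removed, one deliberate statement at a time.
conn.execute("DELETE FROM users WHERE id = 2")
after_targeted_delete = user_count()
print(after_targeted_delete)  # 1
```

On the Rails side, `add_foreign_key :invoices, :users` in a migration creates such a constraint. Without an `on_delete:` option, the database’s default action applies, and that default also rejects deleting a referenced user. `has_many :invoices, dependent: :restrict_with_exception` adds an application-level check on top.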

Remedies

- Put in motion a backup/restore plan

- a) Make sure you have incremental backups dating back far enough in history

- Remove cascade delete – both for the database and for Rails

- Enforce referential integrity

- Support “deactivation” instead of “soft” delete

It is like digging a moat around a castle to protect the valuables inside. Make sure that completely removing data from the database has to be manual, painful, and complicated.
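One possible way to make deletion “manual, painful, and complicated” is a guard trigger that rejects every DELETE on a table until someone deliberately removes it. This is a hypothetical sketch, not an established recipe. It uses Python’s built-in `sqlite3`, and the trigger name and message are made up for illustration.

```python
import sqlite3

# Hypothetical guard trigger: every DELETE on users is aborted while it exists.
GUARD_SQL = """
    CREATE TRIGGER users_no_delete BEFORE DELETE ON users
    BEGIN
        SELECT RAISE(ABORT, 'users are never deleted; deactivate instead');
    END
"""

conn = sqlite3.connect(":memory:", isolation_level=None)  # autocommit; transactions are explicit
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
conn.execute(GUARD_SQL)


def try_delete(sql: str) -> str:
    """Run a DELETE and report either success or the database's refusal."""
    try:
        conn.execute(sql)
        return "deleted"
    except sqlite3.DatabaseError as exc:  # RAISE(ABORT, ...) surfaces as a constraint error
        return str(exc)


print(try_delete("DELETE FROM users"))  # users are never deleted; deactivate instead

# The deliberate path: drop the guard, delete one specific row, and restore
# the guard in a single transaction, so no other session sees the table unguarded.
conn.execute("BEGIN")
conn.execute("DROP TRIGGER users_no_delete")
conn.execute("DELETE FROM users WHERE id = 2")
conn.execute(GUARD_SQL)
conn.execute("COMMIT")

remaining = conn.execute("SELECT name FROM users").fetchall()
print(remaining)                        # [('Alice',)]
print(try_delete("DELETE FROM users"))  # still refused
```

In a client/server database, the same intent can also be expressed with permissions, for example by not granting DELETE on `users` to the role the application connects as.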

What we want is to limit exposure to mistakes that could trigger unwanted deletion, and to have our data storage protect the data at the lowest possible level: the RDBMS.
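For the “Remove cascade delete” remedy, the first step is finding out where cascades already exist. Below is a minimal audit sketch, again in Python with the built-in `sqlite3` module and a hypothetical schema. It reads each table’s declared foreign keys through `PRAGMA foreign_key_list` and reports the ones marked `ON DELETE CASCADE`. Databases that support the standard `information_schema` expose the same rule in the `DELETE_RULE` column of `referential_constraints`.

```python
from __future__ import annotations

import sqlite3


def cascading_foreign_keys(conn: sqlite3.Connection) -> list[tuple[str, str, str]]:
    """List (child_table, column, parent_table) for every FK declared ON DELETE CASCADE."""
    found = []
    tables = [name for (name,) in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    for table in tables:
        # foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
        for row in conn.execute(f'PRAGMA foreign_key_list("{table}")'):
            parent, column, on_delete = row[2], row[3], row[6]
            if on_delete.upper() == "CASCADE":
                found.append((table, column, parent))
    return found


# Hypothetical schema with one cascading and one restrictive foreign key.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY);
    CREATE TABLE invoices (id INTEGER PRIMARY KEY,
                           user_id INTEGER REFERENCES users(id) ON DELETE CASCADE);
    CREATE TABLE payments (id INTEGER PRIMARY KEY,
                           user_id INTEGER REFERENCES users(id) ON DELETE RESTRICT);
""")
print(cascading_foreign_keys(conn))  # [('invoices', 'user_id', 'users')]
```

On the Rails side, the equivalent search is for `dependent: :destroy` and `dependent: :delete_all` in model associations.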

There is no silver bullet, no perfect solution. We still have to turn on our brains and hope for the best. Nevertheless, we can improve our chances by doing the things listed above.